AI Is Changing Home Buying and Renting—But Not Always for the Better

Buying or renting a home has never been easier thanks to technology. You can search listings for homes, submit an application to rent, and find a lender—all online.

But the introduction of artificial intelligence into real estate tools has raised concerns that this technology could violate fair lending, fair housing, and other consumer protection laws, and could also threaten personal privacy.

Today’s WatchBlog post looks at our two new reports on the use and risks of AI in property technology used to buy or rent homes.


How is AI changing home buying and renting?

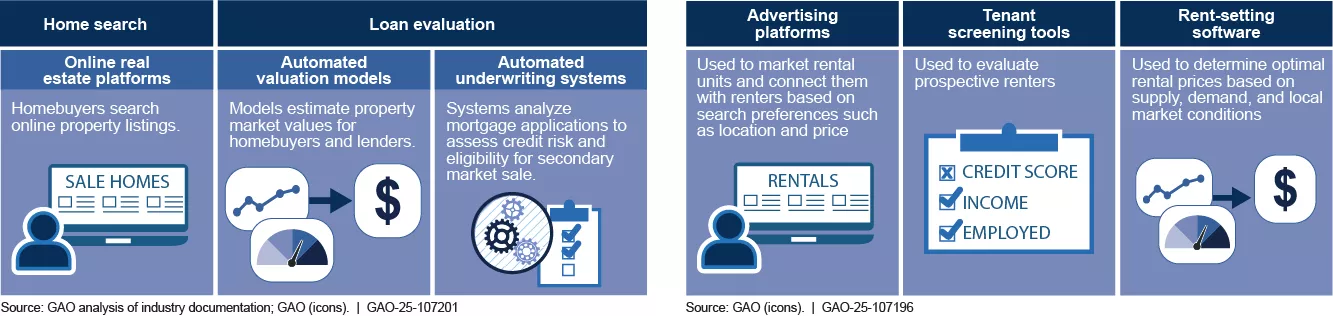

The technologies used to buy or rent a home have simplified the process and reduced costs for many. These technologies aren’t new. Nearly all homebuyers use online platforms—such as Realtor.com, Redfin, and Zillow—to search for homes, according to a 2021 study.

What is new is that these platforms are increasingly using AI to generate search results and other information for users. For example, virtual assistants and chatbots answer homebuyers' and renters' questions or deliver search results. Using AI, these tools generate content such as personalized listings or financing options. And who among us hasn't looked up our address (or our neighbor's) to see what our home is worth, or what it might sell or rent for if listed today? These estimates are generated with AI, which aggregates and analyzes large volumes of market data to produce the estimate. Fun, right?

Absolutely! But the danger comes if these technologies, such as chatbots and other AI-assisted tools, steer homebuyers and renters toward only certain listings or neighborhoods. For example, problems could arise if an AI tool failed to recognize problematic search terms that users enter, such as terms related to race, ethnicity, gender, age, or other protected classes. This could result in discrimination that may be illegal under fair housing laws. Online platforms can also increase consumer privacy risks when they collect potentially sensitive consumer data to personalize product offerings and target digital marketing to consumers.

There are other possible dangers with AI use

Mortgage underwriting may also be affected if AI is incorporated into the systems lenders use to make loan decisions. Today, lenders use AI to review homebuyers' paperwork, such as employment and payroll information. But using AI to make loan decisions could make it harder to understand why someone was denied a loan, or could perpetuate biases in mortgage lending.

Figure: Homebuying and Rental Property Technology That Uses AI

AI use may also affect rents. The technologies used to estimate home prices are also being used to set rents.

What’s good about that? AI can allow rents to be more responsive to market changes. For example, AI could help owners factor in vacancy and occupancy rates to understand the market and generate more competitive rents for renters.

What's bad about it? AI could also drive up rents by setting rates based on factors such as zip code rather than building condition and amenities. It may also reduce renters' ability to negotiate rents.

Who is monitoring and regulating AI use in home buying and renting?

Several federal agencies are charged with overseeing real estate markets to ensure that lenders, landlords, and other real estate players comply with laws meant to protect homebuyers and renters. Some of these agencies have taken action on these issues, including:

- The Federal Housing Finance Agency has examined some of the products that use AI, including automated mortgage underwriting systems and the automated valuation models that many lenders use.

- Federal agencies have pursued legal action and obtained settlements to combat alleged misleading and discriminatory advertising on rental platforms.

- Agencies have also taken enforcement actions against companies that screen out tenants based on inaccurate or outdated data.

Some federal agencies are beginning to change how they oversee these products for compliance with fair housing and lending laws. Even so, we think more could be done to oversee such technology and prevent its potential misuse.

To learn more about what we found and recommended to federal agencies about AI use in real estate, check out our new reports on home buying and renting.

- GAO’s fact-based, nonpartisan information helps Congress and federal agencies improve government. The WatchBlog lets us contextualize GAO’s work a little more for the public. Check out more of our posts at GAO.gov/blog.

- Got a comment or question? Email us at blog@gao.gov.


GAO's mission is to provide Congress with fact-based, nonpartisan information that can help improve federal government performance and ensure accountability for the benefit of the American people. GAO launched its WatchBlog in January 2014 as part of its continuing effort to reach its audiences (Congress and the American people) where they are currently looking for information.

The blog format allows GAO to provide a little more context about its work than it can offer on its other social media platforms. Posts will tie GAO work to current events and the news; show how GAO’s work is affecting agencies or legislation; highlight reports, testimonies, and issue areas where GAO does work; and provide information about GAO itself, among other things.

Please send any feedback on GAO's WatchBlog to blog@gao.gov.