Foreign Assistance: Agencies Can Improve the Quality and Dissemination of Program Evaluations

Fast Facts

The U.S. government plans to spend about $35 billion on foreign assistance programs in 2017, and program evaluations can help assess and improve the results of this spending.

However, our review of the six agencies spending the most on foreign aid (shown below) found that about a quarter of their program evaluations in 2015 lacked adequate information on results to inform future programs.

We recommended that each agency develop a plan to improve the quality of its evaluations. We also recommended that some agencies improve their procedures to disseminate their evaluation reports—which may help future programs benefit from lessons learned.

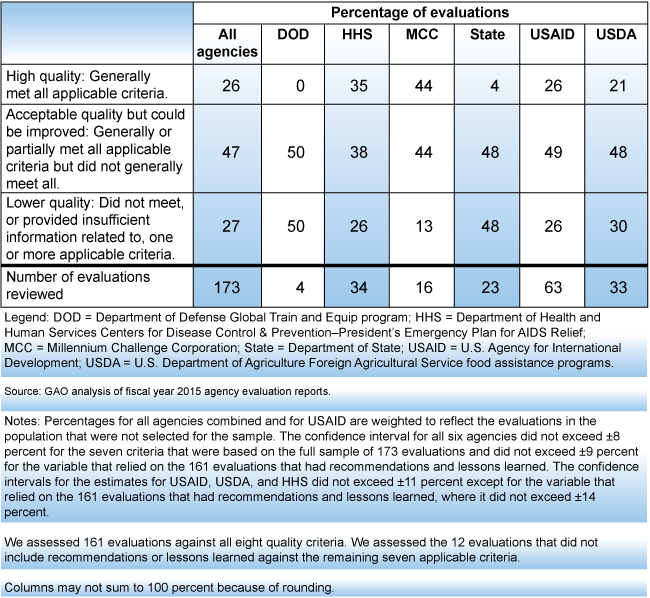

Estimated Percentages of Agency Evaluations Generally or Partially Meeting Applicable Quality Criteria or Not Meeting One or More Criteria

Summary Table of Foreign Assistance Evaluation Quality by Agency

Highlights

What GAO Found

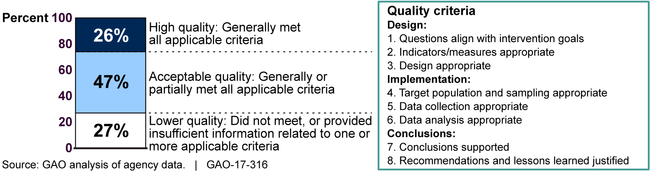

An estimated 73 percent of evaluations completed in fiscal year 2015 by the six U.S. agencies GAO reviewed generally or partially addressed all of the quality criteria GAO identified for evaluation design, implementation, and conclusions (see fig.). Agencies met some elements of the criteria more often than others. For example, approximately 90 percent of all evaluations addressed questions that are generally aligned with program goals and were thus able to provide useful information about program results. About 40 percent of evaluations did not use generally appropriate sampling, data collection, or analysis methods. Although implementing evaluations overseas poses significant methodological challenges, GAO identified opportunities for each agency to improve evaluation quality and thereby strengthen its ability to manage aid funds more effectively based on results.

Estimated Percentage of Foreign Assistance Evaluations Meeting Evaluation Quality Criteria

Note: The confidence intervals for our estimates of the quality of agency evaluations according to these categories did not exceed ±8 percent.

Evaluation costs ranged widely and were sometimes difficult to determine, but the majority of evaluations GAO examined cost less than $200,000. Millennium Challenge Corporation (MCC) evaluations had a median cost of about $269,000, while median costs for the U.S. Agency for International Development (USAID), the U.S. Department of Agriculture (USDA), and the Department of State (State) ranged from about $88,000 to about $178,000. GAO was unable to identify the specific costs for the Department of Defense (DOD) and Department of Health and Human Services (HHS) evaluations. High-quality evaluations tend to be more costly, but some well-designed lower-cost evaluations also met all quality criteria. Other factors related to evaluation costs include the evaluation's choice of methodology, its duration, and its location.

Agencies generally posted and distributed evaluations for the use of internal and external stakeholders. However, shortfalls in some agency efforts may limit the evaluations' usefulness.

- Public posting. USDA has not developed procedures for reviewing and preparing its evaluations for public posting, but the other agencies posted nonsensitive reports on a public website.

- Timeliness. Some HHS reports and more than half of MCC reports were posted a year or more after completion.

- Dissemination planning. State does not currently have a policy requiring a plan that identifies potential users and the means of dissemination.

Why GAO Did This Study

The U.S. government plans to spend approximately $35 billion on foreign assistance in 2017. Evaluation is an essential tool for U.S. agencies to assess and improve the results of their programs. Government-wide guidance emphasizes the importance of evaluation, and the Foreign Aid Transparency and Accountability Act of 2016 requires the President to establish guidelines for conducting evaluations. However, evaluations can be challenging to conduct. GAO has previously reported on challenges in the design, implementation, and dissemination of the evaluations of individual foreign assistance programs.

GAO was asked to review foreign aid evaluations across multiple agencies. This report examines the (1) quality, (2) cost, and (3) dissemination of foreign aid program evaluations. GAO assessed a representative sample of 173 fiscal year 2015 evaluations for programs at the six agencies providing the largest amounts of U.S. foreign aid (USAID, State, MCC, HHS's Centers for Disease Control and Prevention under the President's Emergency Plan for AIDS Relief, USDA's Foreign Agricultural Service, and DOD's Global Train and Equip program) against leading evaluation quality criteria; analyzed cost and contract documents; and reviewed agency websites and dissemination procedures.

Recommendations

GAO recommends that each of the six agencies develop a plan to improve the quality of its evaluations and that HHS, MCC, State, and USDA improve their procedures and planning for disseminating evaluation reports.

The agencies concurred with our recommendations, except DOD, which partially concurred.

Recommendations for Executive Action

| Agency Affected | Recommendation | Status |
|---|---|---|
| Millennium Challenge Corporation | To improve the reliability and usefulness of program evaluations for agency program and budget decisions, the Chief Executive Officer of MCC, the Administrator of USAID, the Secretary of Agriculture, the Secretary of Defense, the Secretary of State, and the Secretary of Health and Human Services (in cooperation with State's Office of the U.S. Global AIDS Coordinator and Health Diplomacy) should each develop a plan for improving the quality of evaluations for the programs included in our review, focusing on areas where our analysis has shown the largest areas for potential improvement. | We found that the majority of MCC's fiscal year 2015 evaluations met the 8 quality criteria we developed. However, statements of independence were the largest area of potential improvement we found for MCC in our analysis; none of the evaluations we reviewed discussed conflicts of interest. In a March 2017 letter provided to GAO, MCC stated that it had responded to our finding that MCC needed to more clearly document the independence of its evaluators and fully disclose any potential conflicts of interest in the published final evaluations. In response to this recommendation, MCC revised its standard evaluator contract language to require the evaluator's independence, and any potential conflicts of interest, to be fully documented in the published evaluation report. In August 2019, MCC provided us with a copy of the updated independence statement for the 8 most recent evaluation reports. Though some of the reports had been placed under contract before an independence statement was required, 7 of the 8 included an independence statement in keeping with MCC guidance. |
| Department of Agriculture | To improve the reliability and usefulness of program evaluations for agency program and budget decisions, the Chief Executive Officer of MCC, the Administrator of USAID, the Secretary of Agriculture, the Secretary of Defense, the Secretary of State, and the Secretary of Health and Human Services (in cooperation with State's Office of the U.S. Global AIDS Coordinator and Health Diplomacy) should each develop a plan for improving the quality of evaluations for the programs included in our review, focusing on areas where our analysis has shown the largest areas for potential improvement. | In March 2017, GAO reported that about one-third of the U.S. Department of Agriculture (USDA) evaluations prepared by the agency in 2015 were of lower quality, meaning they did not meet, or provided insufficient information related to, one or more applicable quality criteria that GAO identified. GAO recommended that USDA develop a plan for improving the quality of evaluations for the programs included in our review, focusing on areas where our analysis showed the largest areas for potential improvement. In June 2020, USDA provided GAO with a copy of its International Food Assistance Division Evaluation Quality Checklist, which incorporates a number of elements derived from our review. The checklist requires that draft evaluations be reviewed against specific criteria, including elements of the evaluation's design, methodology (including sampling and data reliability), credibility of its findings, and appropriateness of its conclusions and recommendations. The checklist further notes that any deficiencies require follow-up with the evaluator and the implementing partner before the draft evaluation report is approved. Ensuring high-quality evaluations will help the agency identify successful programs to expand or pitfalls to avoid and continuously improve its foreign assistance programs. |
| Department of Defense | To improve the reliability and usefulness of program evaluations for agency program and budget decisions, the Chief Executive Officer of MCC, the Administrator of USAID, the Secretary of Agriculture, the Secretary of Defense, the Secretary of State, and the Secretary of Health and Human Services (in cooperation with State's Office of the U.S. Global AIDS Coordinator and Health Diplomacy) should each develop a plan for improving the quality of evaluations for the programs included in our review, focusing on areas where our analysis has shown the largest areas for potential improvement. | DOD partially concurred with the recommendation, noting that in many cases, certain methodologies are not well suited for security assistance evaluation. However, DOD stated that it would diligently review the recommendations and best practices among the assessment, monitoring, and evaluation professional community to determine which characteristics are best suited for the unique security sector assessment mission. In January 2017, DOD established a policy on assessment, monitoring, and evaluation (AM&E) for security cooperation with the aim of improving the quality of program evaluation across DOD. In addition, in May 2018 we reported (GAO-18-449) that DOD is developing an enhanced assessment process that includes increased staffing dedicated to monitoring and evaluation. For example, DOD officials said that they had hired several full-time contractors to perform key tasks related to monitoring and evaluation. According to the officials, several full-time contractor positions will be located in the various geographic combatant command locations, with responsibilities to develop baseline assessments and oversee the quality and completeness of those assessments; develop performance indicators and performance plans; conduct monitoring activities and provide reports to DOD; and conduct annual, independent, in-depth evaluations of a few Global Train and Equip projects. DOD officials also stated that they had hired a full-time contractor who will be based at headquarters, provide further support for each geographic combatant command, and be charged with documenting that baseline assessments were completed and conducting quality reviews of assessment-related documents. |
| Department of Health and Human Services | To improve the reliability and usefulness of program evaluations for agency program and budget decisions, the Chief Executive Officer of MCC, the Administrator of USAID, the Secretary of Agriculture, the Secretary of Defense, the Secretary of State, and the Secretary of Health and Human Services (in cooperation with State's Office of the U.S. Global AIDS Coordinator and Health Diplomacy) should each develop a plan for improving the quality of evaluations for the programs included in our review, focusing on areas where our analysis has shown the largest areas for potential improvement. | In March 2017, GAO reported that about one-quarter of the President's Emergency Plan for AIDS Relief (PEPFAR) evaluations prepared in 2015 by the Department of Health and Human Services' (HHS) Centers for Disease Control and Prevention (CDC) were of lower quality, meaning they did not meet, or provided insufficient information related to, one or more applicable quality criteria that GAO identified. GAO recommended that HHS develop a plan for improving the quality of evaluations for the programs included in our review, focusing on areas where our analysis has shown the largest areas for potential improvement. CDC updated the PEPFAR Evaluation Standards of Practice (ESOP) in January 2017, during the period when our report was available to CDC for comment. The ESOP provides guidance for evaluation planning, protocol development, implementation, reporting, dissemination, and use of evaluation results. In further response to our recommendation, CDC created checklists to be completed for each evaluation report to assess the evaluation's completeness with respect to quality standards and its adherence to those standards. The checklists require an assessment of the appropriateness of the evaluation's design and methods, implementation, and conclusions: the three evaluation phases whose quality GAO evaluated. The checklists also include a check that the evaluation's data collection tools are included in an appendix or otherwise made available. Including the data collection tools will help reduce the likelihood that an outside observer lacks sufficient information to assess the quality of the evaluation's implementation. |
| Department of State | To improve the reliability and usefulness of program evaluations for agency program and budget decisions, the Chief Executive Officer of MCC, the Administrator of USAID, the Secretary of Agriculture, the Secretary of Defense, the Secretary of State, and the Secretary of Health and Human Services (in cooperation with State's Office of the U.S. Global AIDS Coordinator and Health Diplomacy) should each develop a plan for improving the quality of evaluations for the programs included in our review, focusing on areas where our analysis has shown the largest areas for potential improvement. | In comments on GAO's draft report, State concurred with the recommendation and said that its planned new policy and toolkit for evaluations constituted a plan going forward. Since the issuance of our report, State has adopted a new and updated policy, guidance document, and toolkit that address areas we identified for potential improvement. In November 2017, State revised its evaluation policy, the Program and Project Design, Monitoring, and Evaluation Policy. State's 2017 Program Design and Performance Management Toolkit includes tools and guidance for developing indicators, an area we identified for potential improvement. The Toolkit also includes examples of how to conduct a data quality assessment; we had previously found that about 17 percent of State evaluation reports had data collection procedures that generally appeared to ensure the quality of the data. The current State template for evaluation reports includes a template for disclosing any conflicts of interest; we previously found that only one-quarter of State evaluations discussed potential conflicts of interest. The January 2018 Guidance for the Design, Monitoring and Evaluation Policy at the Department of State further requires that State officials review the quality of the methods described in draft evaluation designs and that evaluation reports succinctly describe the evaluation design, data collection methods, and their limitations, which could address weaknesses we identified in State evaluations with regard to data collection and analysis. |
| U.S. Agency for International Development | To improve the reliability and usefulness of program evaluations for agency program and budget decisions, the Chief Executive Officer of MCC, the Administrator of USAID, the Secretary of Agriculture, the Secretary of Defense, the Secretary of State, and the Secretary of Health and Human Services (in cooperation with State's Office of the U.S. Global AIDS Coordinator and Health Diplomacy) should each develop a plan for improving the quality of evaluations for the programs included in our review, focusing on areas where our analysis has shown the largest areas for potential improvement. | USAID published the Automated Directives System (ADS) Chapter 201 Program Cycle Operational Policy in September 2016, and then partially revised this guidance in July 2017 and again in February 2018. The ADS guidance provides considerations, practices, and requirements for evaluating the performance and results of USAID programs. The Deputy Director of USAID's Office of Learning, Evaluation and Research stated that the initial revisions to this guidance, published in September 2016, were influenced by GAO's ongoing audit work as well as by USAID's own learning and analysis. The updated guidance includes revised requirements for evaluations in areas where our analysis has shown the largest areas for potential improvement. For example, GAO previously noted that more than half of USAID evaluations did not generally demonstrate the use of data collection methods that ensured data reliability. The revised ADS guidance requires USAID staff to conduct a data quality assessment for performance indicators during project monitoring and requires that evaluators consider data availability and quality during the evaluation planning process. GAO also found that approximately one-half of USAID evaluations did not discuss whether the evaluator had any conflicts of interest; the revised guidance requires that all external evaluation team members provide a signed statement attesting to a lack of conflicts of interest or describing any existing conflicts. In addition, GAO found that about 40 percent of USAID evaluations did not generally demonstrate appropriate target population and sampling, and about 40 percent did not generally demonstrate appropriate data collection. The revised guidance requires evaluators, during evaluation implementation, to describe in a written evaluation design the key questions, methods, main features of data collection instruments, and data analysis plans. The revised guidance also requires a peer review of the scope of work of the proposed evaluation to ensure that the scope adheres to USAID requirements, and another peer review of the evaluation report itself. |
| Department of Health and Human Services | To better ensure that the evaluation findings reach their intended audiences and are available to facilitate incorporating lessons learned into future program design or budget decisions, the Secretary of Health and Human Services should direct the Centers for Disease Control and Prevention to update its guidance and practices on the posting of evaluations to require President's Emergency Plan for AIDS Relief (PEPFAR) evaluations to be posted within the timeframe required by PEPFAR guidance. | In comments on a draft of this report, the Centers for Disease Control and Prevention (CDC) agreed with GAO's recommendation that it update its guidance and practices on the posting of evaluations to require the President's Emergency Plan for AIDS Relief (PEPFAR) evaluations to be posted within the timeframe required by PEPFAR guidance. CDC noted that it was updating its guidance to require that all PEPFAR evaluations be posted online within 90 days, as required by PEPFAR. CDC took this action in January 2017, after our report was made available to it for agency comment and before the report's final publication on March 3, 2017. |
| Millennium Challenge Corporation | To better ensure that the evaluation findings reach their intended audiences and are available to facilitate incorporating lessons learned into future program design or budget decisions, the Chief Executive Officer of MCC should adjust MCC evaluation practices to make evaluation reports available within the timeframe required by MCC guidance. | In March 2017, GAO reported that the Millennium Challenge Corporation (MCC) did not post some evaluations within the timeframes it required, limiting stakeholders' ability to make optimal use of the evaluation findings. Specifically, we found that MCC did not post 10 of its 16 evaluations within 6 months after MCC received them, and it did not post 8 of these 10 evaluations until a year or more after MCC received them. We recommended that MCC adjust its evaluation practices to make evaluation reports available within the timeframe required by MCC guidance. MCC concurred with the recommendation but noted that its then-forthcoming revised policy on monitoring and evaluation would state that "MCC expects to make each interim and final evaluation report publicly available as soon as practical after receiving the draft report." This revised guidance did not set a specific time frame for the reviews. Nonetheless, in follow-up to our recommendation, we tracked the time MCC required to post evaluations in subsequent years. By fiscal year 2020, MCC improved the timeliness of its posting of evaluation reports and posted 20 of 27 fiscal year 2020 evaluation reports six months or less after the date of the report. By making evaluation reports accessible in a timely manner, MCC will help ensure that interested parties can access the findings of these evaluations in time to incorporate them into program management decisions. |
| Department of State | To better ensure that the evaluation findings reach their intended audiences and are available to facilitate incorporating lessons learned into future program design or budget decisions, the Secretary of State should amend State's evaluation policy to require the completion of dissemination plans for all agency evaluations. | In comments on GAO's draft report, State concurred with the recommendation and said that it planned to update its policy. In November 2017, State revised its evaluation policy, the Program and Project Design, Monitoring, and Evaluation Policy, to require State bureaus and independent offices to develop evaluation dissemination plans. These plans are to delineate all stakeholders and ensure that potential users of the evaluation will receive copies or have ready access to them. |
| Department of Agriculture | To better ensure that the evaluation findings reach their intended audiences and are available to facilitate incorporating lessons learned into future program design or budget decisions, the Secretary of Agriculture should implement guidance and procedures for making FAS evaluations available online and searchable on a single website that can be accessed by the general public. | In March 2017, GAO reported that the U.S. Department of Agriculture (USDA) did not publicly post any of the 38 nonsensitive evaluations we reviewed. At that time, USDA stated that it was in the process of developing procedures for making nonsensitive evaluations public, which would include reviewing the documents to ensure that they did not contain, for example, personally identifiable or proprietary information. We recommended that USDA implement guidance and procedures for making FAS evaluations available online and searchable on a single website that can be accessed by the general public. In June 2020, USDA provided GAO with a copy of its new standard operating procedure (SOP) for publishing evaluations. The SOP establishes guidance to ensure that evaluations are reviewed and prepared for publication, and that finalized evaluations are uploaded to the U.S. Agency for International Development (USAID) Development Experience Clearinghouse (DEC) platform. The SOP includes the procedures for uploading the evaluation to DEC and requires that potential concerns with personally identifiable information be addressed. For example, the SOP requires that an evaluation's terms of reference state that the evaluation should not include personally identifiable information and requires any potential instances of such information to be flagged during review of the draft evaluation. By taking steps to ensure improved access to these evaluations, USDA will enable stakeholders to make better-informed decisions about future program design and implementation. |
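
The MCC posting counts in the table above can be restated as on-time rates with simple arithmetic. The short Python sketch below does this. The helper name `on_time_share` is hypothetical, and the percentages it produces are an illustrative calculation from the reported counts, not figures stated in the report.

```python
# Illustrative arithmetic only: share of MCC evaluation reports posted within
# six months, derived from the counts reported in the recommendations table.

def on_time_share(posted_on_time: int, total: int) -> float:
    """Hypothetical helper: percentage of reports posted within the time frame."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * posted_on_time / total

# 2015 sample: 10 of 16 evaluations were NOT posted within 6 months of receipt,
# so 16 - 10 = 6 were posted within that window.
fy2015_share = on_time_share(16 - 10, 16)

# Fiscal year 2020: 20 of 27 reports were posted six months or less after the report date.
fy2020_share = on_time_share(20, 27)

print(f"2015 sample, posted within 6 months: {fy2015_share:.1f}%")
print(f"FY2020, posted within 6 months:      {fy2020_share:.1f}%")
```

The 2015 count is measured from the date MCC received each report, while the fiscal year 2020 count is measured from the date of the report, so the two rates are only roughly comparable.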